For years, most sophisticated AI operations relied heavily on powerful cloud servers, demanding constant internet connectivity and raising concerns about data privacy. That is now changing. Chip manufacturers are embedding AI processing capabilities directly into consumer hardware, altering the landscape of personal technology. This includes a new generation of CPUs and systems-on-chip (SoCs) from Qualcomm, Intel, Apple, and MediaTek, all featuring dedicated neural processing units (NPUs) designed to handle complex AI workloads efficiently.

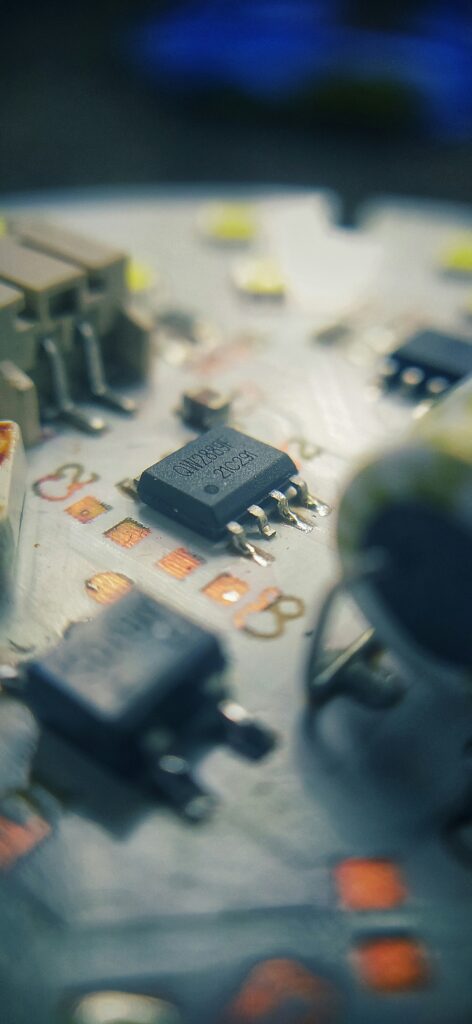

Recent announcements underscore this trend. Qualcomm’s Snapdragon X Elite, designed for Windows PCs, includes an NPU rated at 45 TOPS (trillions of operations per second), promising to transform laptops into ‘AI PCs’. Similarly, Intel’s Core Ultra processors (Meteor Lake) integrate an NPU to power AI-centric features directly on the device, enabling capabilities like real-time background blur in video calls or enhanced noise cancellation with less battery drain than running the same workloads on the CPU or GPU. Apple’s M-series chips have shipped with an integrated Neural Engine since the M1, powering advanced computational photography and on-device machine learning tasks across its ecosystem. This move to **on-device AI chips** marks a commitment from leading chipmakers to bring AI closer to the user.
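To put a TOPS rating in perspective, here is a minimal back-of-the-envelope sketch in Python. It assumes the common convention that peak throughput is the number of multiply-accumulate (MAC) units times two operations per MAC times the clock frequency. The function names, the 4,096-MAC configuration, and the 10-billion-operation model are hypothetical values chosen for illustration, not the specification of any chip mentioned above. Real-world throughput is usually lower than the peak figure because of memory bandwidth limits and imperfect utilization.

```python
def peak_tops(mac_units: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Theoretical peak throughput in TOPS.

    Assumes each MAC unit completes one multiply and one add
    (ops_per_mac = 2) per clock cycle. This is a simplifying assumption,
    not a vendor formula.
    """
    return mac_units * ops_per_mac * clock_hz / 1e12


def min_inference_seconds(ops_per_inference: float, tops: float) -> float:
    """Lower bound on time per inference at a given peak TOPS rating.

    Ignores memory bandwidth, data movement, and utilization, so real
    latency will be higher.
    """
    return ops_per_inference / (tops * 1e12)


# Hypothetical NPU: 4,096 MAC units running at 1 GHz.
print(f"Peak throughput: {peak_tops(4096, 1e9):.3f} TOPS")

# Hypothetical model needing 10 billion operations per inference,
# evaluated against the 45 TOPS rating quoted in the article.
best_case_ms = min_inference_seconds(1e10, 45) * 1e3
print(f"Best-case latency at 45 TOPS: {best_case_ms:.3f} ms")
```

The takeaway is that a TOPS number is a ceiling on arithmetic throughput, so it is most useful for comparing chips of the same class rather than for predicting how fast a particular application will run.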

The Data Behind the Revolution: Efficiency and Privacy at Its Core

Several factors are driving this rapid integration of NPUs. A report from technology news outlet TechCrunch highlights the main advantages: processing AI tasks locally reduces latency, improves responsiveness, and, crucially, enhances user privacy by keeping sensitive data on the device rather than sending it to the cloud. Moreover, NPUs are purpose-built for AI computations, making them far more energy-efficient than general-purpose CPUs or even GPUs for specific machine learning tasks. This efficiency translates directly into longer battery life for devices, even while running complex AI applications.

Market research further supports this trajectory. Analysts predict a sharp rise in the number of devices equipped with dedicated AI hardware. These **AI chips in gadgets** are not just about processing power; they are about enabling entirely new categories of applications and user experiences. Imagine a smartphone that can transcribe live conversations in multiple languages with high accuracy in near real time, or a laptop that can optimize its performance based on your workflow patterns using on-device intelligence. These capabilities are becoming a reality.

Transformative Impact on Industry and User Experience

The widespread adoption of **on-device AI chips** will have profound implications across various sectors. For the gadget industry, it redefines performance metrics, shifting focus from raw CPU speed to AI processing prowess. Developers are already gearing up to leverage these new capabilities, creating a new wave of ‘AI-native’ applications that take full advantage of local processing. This means more personalized, responsive, and secure experiences for users. For instance, enhanced generative AI features for image editing, content creation, or personalized virtual assistants could run seamlessly without an internet connection.

Beyond personal devices, this trend is also influencing other edge computing applications, from smart home devices to industrial IoT. The ability to perform complex inference tasks locally opens up possibilities for greater autonomy and real-time decision-making, reducing reliance on centralized cloud infrastructure. For a deeper dive into how this plays out in broader AI trends, read our recent article on The Rise of Edge AI.

Future Horizons: The Dawn of Truly Intelligent Gadgets

Experts widely agree that we are only at the beginning of the on-device AI era. Industry pioneers like Cristiano Amon, CEO of Qualcomm, envision a future where all new devices will inherently be AI-enabled, fundamentally changing how we interact with technology. This shift moves beyond mere automation, promising to deliver proactive, predictive, and context-aware assistance. The next generation of gadgets won’t just respond to commands; they will anticipate our needs, learn from our habits, and offer personalized experiences that were once confined to science fiction.

While the potential is immense, challenges remain, including optimizing software frameworks to efficiently utilize these NPUs and ensuring that the increased local processing power doesn’t inadvertently introduce new security vulnerabilities. Nevertheless, the trajectory is clear: **on-device AI chips** are set to make our gadgets not just smarter, but truly intelligent companions, reshaping the very fabric of our digital lives.