The Dawn of Ubiquitous On-Device AI

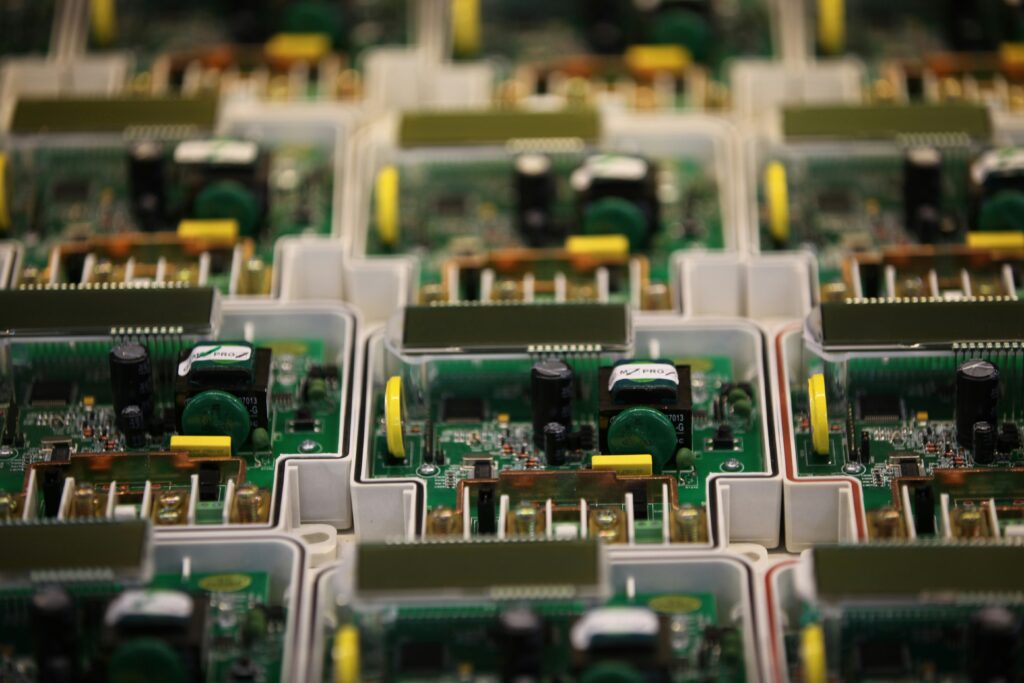

The tech world is witnessing a pivotal shift: the era of ubiquitous on-device AI. Major industry players like Apple, Qualcomm, Intel, and Nvidia are embedding increasingly powerful Neural Processing Units (NPUs) and AI accelerators directly into their latest chipsets. This strategic move, prominently seen in recent product launches such as Apple’s A17 Pro, Qualcomm’s Snapdragon 8 Gen 3, and Intel’s Core Ultra processors, marks a departure from purely cloud-centric AI toward a more distributed, edge-based paradigm. The rationale is clear: by processing AI tasks locally, devices can respond with lower latency, bolster privacy by sending less data to remote servers, and keep demanding AI applications performing well without constant internet connectivity. This is not merely an incremental upgrade but a foundational change in how hardware interacts with intelligence.

Unpacking the Market: Growth and Investment in AI Silicon

The market for specialized **AI chips** is growing rapidly, attracting massive investment and fostering intense competition among semiconductor giants. According to a recent report by Statista, the global AI chip market is projected to exceed $100 billion by 2027, driven primarily by escalating demand for edge computing capabilities and the integration of generative AI features across devices. This level of investment underscores the industry’s belief in on-device AI as the next frontier for technological innovation.

The impact on consumer electronics is already materializing. In smartphones, advanced AI chips enable faster image processing, accurate real-time language translation, and more sophisticated, context-aware voice assistants. Generative AI features, once confined to powerful data centers, now run directly on handsets, allowing for on-the-fly content creation and personalization. Laptops equipped with these new processors benefit from enhanced video conferencing, AI-powered productivity tools that can optimize workflows, and extended battery life, since AI tasks can be offloaded to more efficient dedicated hardware. Beyond personal computing, AI at the edge gives IoT and smart home devices greater autonomy, enabling local data processing for heightened security, faster responses, and reduced bandwidth consumption.

The Strategic Implications for Industry and Consumers

This shift to on-device AI has far-reaching strategic implications for both industry and everyday consumers. For hardware manufacturers, it’s a race to develop the most efficient and powerful AI silicon, setting new benchmarks for performance and energy efficiency. It also pushes innovation in software development, fostering new ecosystems of AI applications that can take advantage of local processing power. Crucially, the move toward on-device AI enhances user privacy by minimizing the amount of sensitive data sent to the cloud, giving users more control over their personal information and reducing their exposure to data breaches.

Industry analysts, such as those at IDC, predict that within the next five years, nearly every new computing device, from smartwatches to high-end servers, will feature dedicated AI acceleration. This transformation is poised to redefine user experience, enabling personalized and adaptive computing environments that can anticipate user needs and execute complex tasks far faster than before. However, challenges remain, including the need for greater power efficiency in chip design, robust and accessible developer tools for on-device AI, and industry-wide standards to ensure seamless integration across diverse platforms. For a broader look at how AI is shaping our world, explore our article on The Future of AI: Key Trends to Watch in 2024.