In recent months, Artificial Intelligence capabilities have advanced quickly, particularly with the rise of multimodal AI. No longer confined to a single type of data, current models can interpret and generate information across text, images, video, and audio. This convergence marks a shift away from traditional unimodal systems, exemplified by models such as Google’s Gemini and OpenAI’s GPT-4V, which show strong contextual understanding. For instance, these systems can analyze a photograph, identify the objects and activities within it, and then generate a descriptive narrative or answer complex questions about the scene, bridging perception and language in a way that earlier systems could not.

The Data Revolution: Enhanced Comprehension Through Multimodality

This leap in AI capabilities isn’t merely about processing more data; it’s about processing it holistically, which leads to a deeper and more nuanced understanding of complex real-world scenarios. Research institutions and technology companies regularly publish findings in which multimodal architectures outperform unimodal ones. Studies presented at AI conferences such as NeurIPS and AAAI report accuracy gains, sometimes cited as up to 30%, on tasks requiring cross-modal reasoning compared with unimodal predecessors. By integrating information from different sensory inputs, these models can resolve ambiguities that a single data stream would leave open. For example, an AI assessing a medical image can combine visual cues with a patient’s textual history and even audio notes from a doctor, which can support a more accurate diagnosis and a more complete picture of the patient’s condition.
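To make the idea of resolving ambiguity concrete, here is a minimal sketch of one simple way to combine modalities, often called late fusion: each modality produces its own class probabilities, and a weighted average decides the final label. This is an illustration only, not how Gemini or GPT-4V work internally. The function names, labels, weights, and probability values are all hypothetical.

```python
from typing import Dict


def late_fusion(per_modality: Dict[str, Dict[str, float]],
                weights: Dict[str, float]) -> Dict[str, float]:
    """Weighted average of per-class probabilities across modalities."""
    total_weight = sum(weights[m] for m in per_modality)
    # Collect every label that any modality assigned a probability to.
    labels = set().union(*(probs.keys() for probs in per_modality.values()))
    return {
        label: sum(weights[m] * probs.get(label, 0.0)
                   for m, probs in per_modality.items()) / total_weight
        for label in labels
    }


def predict(probs: Dict[str, float]) -> str:
    """Return the label with the highest probability."""
    return max(probs, key=probs.get)


# Hypothetical scores: the caption "look at that bat" is ambiguous on its own,
# while the accompanying photo clearly shows an animal.
text_probs = {"animal": 0.48, "sports equipment": 0.52}
image_probs = {"animal": 0.85, "sports equipment": 0.15}

fused = late_fusion(
    {"text": text_probs, "image": image_probs},
    weights={"text": 1.0, "image": 1.0},  # equal trust in both modalities (assumed)
)

print("text only:", predict(text_probs))
print("fused:    ", predict(fused))
```

With these made-up numbers, the text-only prediction leans toward the wrong sense of "bat", and adding the image tips the fused result toward "animal". Production multimodal models typically learn a joint representation rather than averaging separate outputs, so treat this as a toy model of the principle.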

Transformative Impact Across Key Industries

The implications of multimodal AI extend far beyond academic research and could reshape numerous industries. In healthcare, AI can analyze X-rays, MRI scans, and pathology reports alongside electronic health records and even spoken symptoms, assisting doctors with faster, more precise diagnoses and personalized treatment plans. The ability to cross-reference large amounts of disparate medical data opens new possibilities for diagnostic accuracy. Similarly, in education, multimodal AI is paving the way for highly interactive and personalized learning experiences. Imagine an AI tutor that explains a complex scientific concept using a combination of diagrams, animated videos, text, and spoken language, adapting its teaching style to a student’s real-time engagement and comprehension. This dynamic approach promises to make learning more accessible and engaging for diverse learners.

Enhancing Accessibility and Human-Computer Interaction

Beyond industrial applications, multimodal AI has considerable potential to improve daily life and foster greater inclusivity. It can power virtual assistants that not only understand spoken commands but also interpret visual cues from the user’s environment, leading to more natural and intuitive interactions. For individuals with disabilities, the benefits could be substantial. For example, a visually impaired person could receive real-time, detailed audio descriptions of their surroundings or of a video they are watching. Similarly, for people who communicate in sign language, AI could convert signing into spoken words or text, breaking down communication barriers. This evolution moves us closer to a world where technology adapts to human needs, rather than the other way around.

The Road Ahead: Predictions and Ethical Considerations

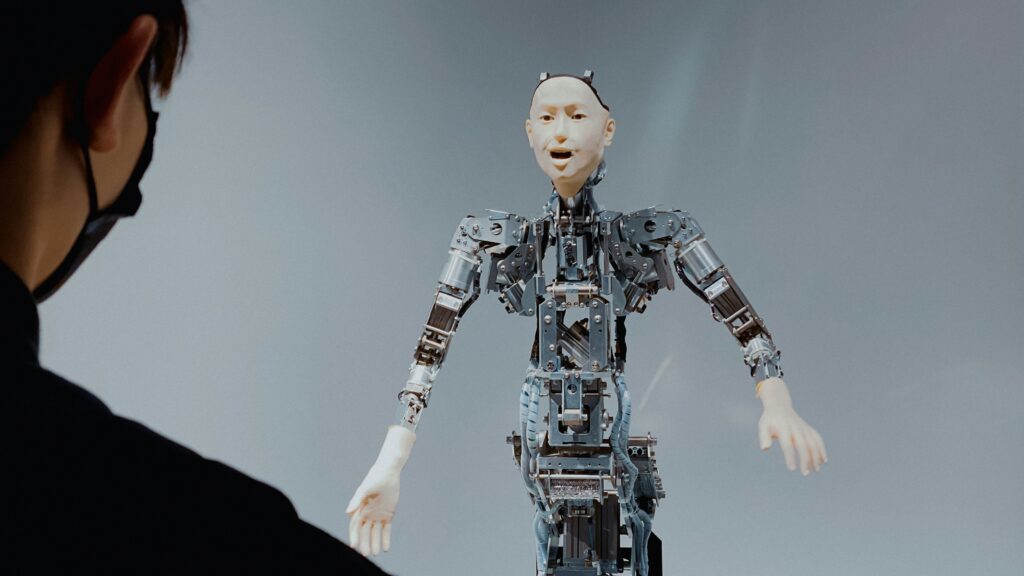

Looking to the future, many experts predict that multimodal AI will become a core component of most advanced technological systems. We can anticipate more context-aware AI assistants, hyper-personalized content creation, and robots that navigate and interact with the world in increasingly human-like ways. However, this progress also raises critical ethical considerations. Ensuring fairness, transparency, and accountability in multimodal models, especially with respect to data bias and potential misuse, is paramount. As this branch of machine learning continues to evolve, responsible development and robust ethical guidelines will be crucial to harnessing its potential for the benefit of society.